AI information retrieval: A search engine researcher explains the promise and peril of letting ChatGPT and its cousins search the web for you

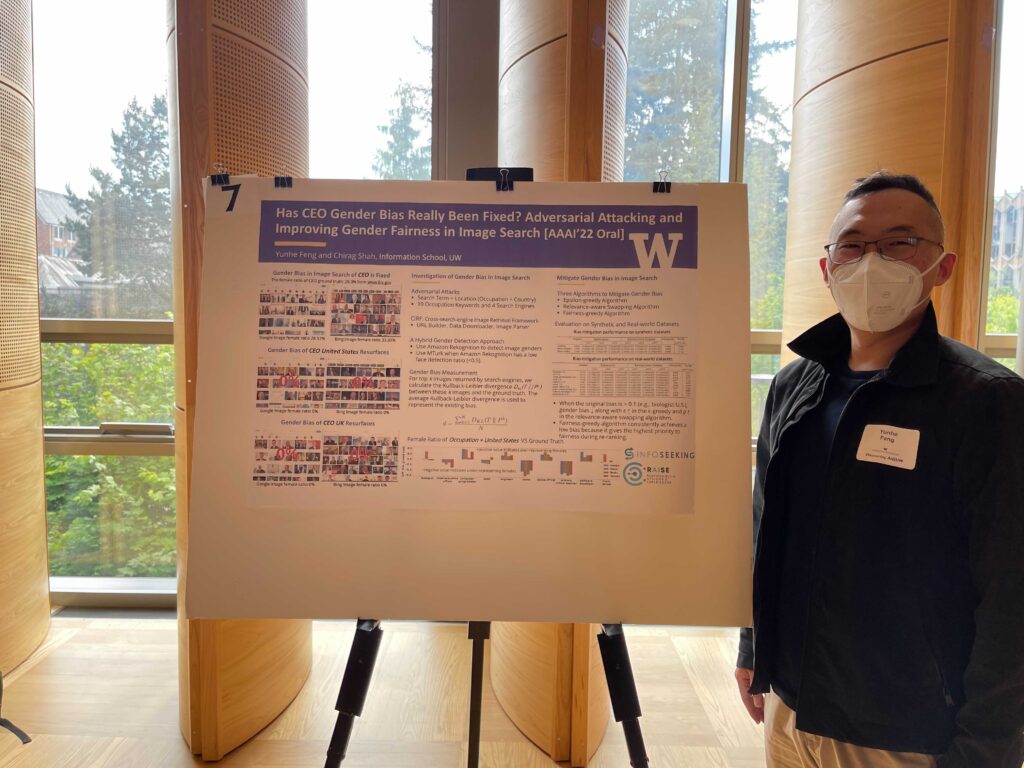

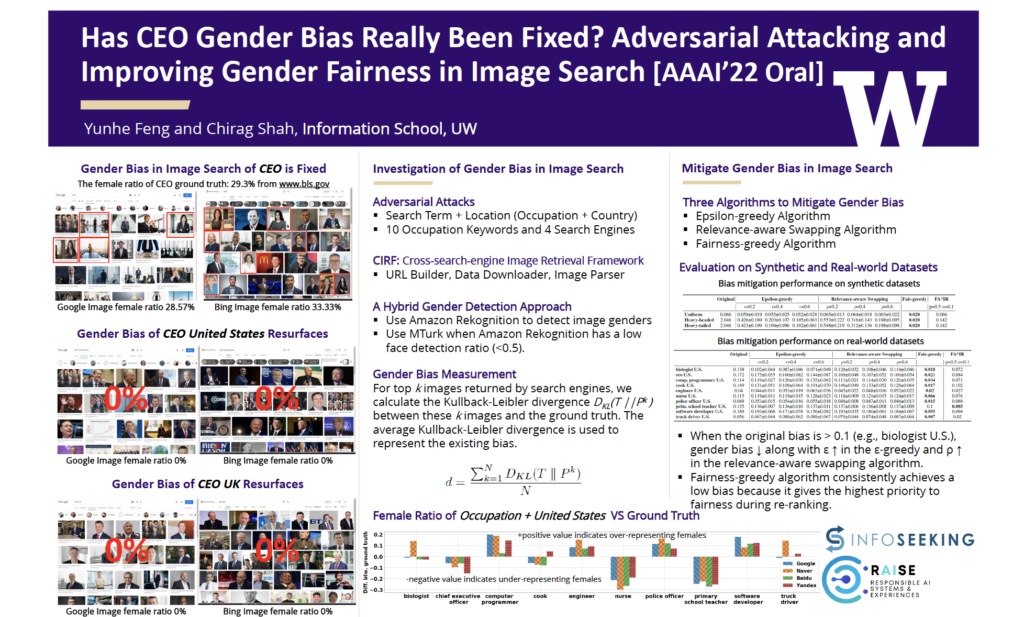

Author: Chirag Shah, Professor of Information Science, University of Washington

The predominant model of information access before search engines became the norm, in which librarians and subject or search experts provided relevant information, was interactive, personalized, transparent and authoritative. Search engines are the primary way most people access information today, but entering a few keywords and getting a list of results ranked by some unknown function is not ideal.

A new generation of artificial intelligence-based information access systems, which includes Microsoft’s Bing/ChatGPT, Google/Bard and Meta/LLaMA, is upending the traditional search engine mode of search input and output. These systems are able to take full sentences and even paragraphs as input and generate personalized natural language responses.

At first glance, this might seem like the best of both worlds: personable and custom answers combined with the breadth and depth of knowledge on the internet. But as a researcher who studies search and recommendation systems, I believe the picture is mixed at best.

AI systems like ChatGPT and Bard are built on large language models. A language model is a machine-learning technique that uses a large body of available texts, such as Wikipedia and PubMed articles, to learn patterns. In simple terms, these models figure out what word is likely to come next, given a set of words or a phrase. In doing so, they are able to generate sentences, paragraphs and even pages that correspond to a query from a user. On March 14, 2023, OpenAI announced the next generation of the technology, GPT-4, which works with both text and image input, and Microsoft announced that its conversational Bing is based on GPT-4.
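To make the "what word is likely to come next" idea concrete, here is a toy sketch in Python. It is not how ChatGPT or Bard actually work: real large language models use neural networks trained on vastly more text, whereas this example only counts which word follows which in a tiny made-up corpus. The function names (`train_bigram_model`, `predict_next`, `generate`) and the sample sentences are illustrative assumptions, not taken from any real system.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if the word is unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(model, start, max_words=5):
    """Build a short phrase by repeatedly appending the likeliest next word."""
    out = [start.lower()]
    for _ in range(max_words - 1):
        nxt = predict_next(model, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


# Hypothetical miniature "training corpus" (an assumption for illustration).
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept ."
model = train_bigram_model(corpus)

print(predict_next(model, "the"))   # likeliest word after "the" in this corpus
print(generate(model, "on", 3))     # a three-word continuation starting at "on"
```

Even this crude counter hints at both the promise and the limits of the approach: it produces fluent-looking continuations of whatever it has seen, but it has no notion of whether the resulting text is true.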

Thanks to training on large bodies of text, fine-tuning and other machine learning-based methods, this type of information retrieval technique works quite effectively. The large language model-based systems generate personalized responses to fulfill information queries. People have found the results so impressive that ChatGPT reached 100 million users in one-third of the time it took TikTok to get to that milestone. People have used it not only to find answers but also to generate diagnoses, create dieting plans and make investment recommendations.