People can’t identify COVID-19 fake news

A recent study conducted by our lab, the InfoSeeking Lab at the University of Washington, Seattle, shows that people can’t spot COVID-19 fake news in search results.

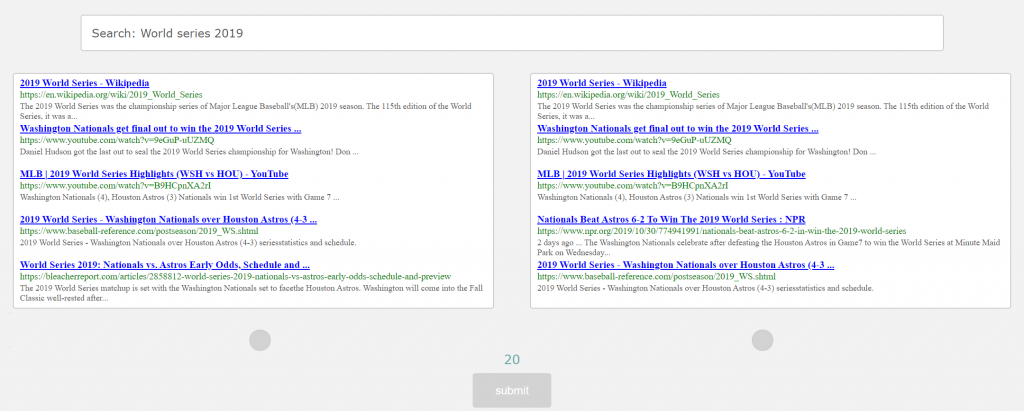

In the study, participants chose between two sets of top 10 search results. One set came directly from Google; the other had been manipulated by inserting one or two fake news results into the list.

The study continues prior experiments from the lab that used a similar setting, but with random information manipulated in the top 10 search results. The outcomes all point in the same direction: people can’t tell which search results have been manipulated.

“This means that I am able to sell this whole package of recommendations with a couple of bad things in it without you ever even noticing. Those bad things can be misinformation or whatever hidden agenda that I have,” said Chirag Shah, InfoSeeking Lab Director and Associate Professor at the University of Washington.

This raises an important problem that people tend to overlook. They believe that what they see is true because it comes from Google, Amazon, or some other system they use daily. That trust is strongest for prime positions such as the first 10 results: multiple studies show that more than 90% of searchers’ clicks concentrate on the first page. This means any manipulated information that makes it onto Google’s first page of search results is perceived as true.

In the current situation, people are worried and uncertain. Many of us seek updates about the situation daily, and Google is the top search engine that we turn to. People need trustworthy information; however, many are taking advantage of people’s fear and spreading misinformation to serve their own agenda. What if the next piece of fake news claimed that, according to a new finding, the virus has mutated and now has an 80% fatality rate? What would that do to our community? Would people start to hoard food? Would people wearing masks in public be attacked? Would you be able to spot the fake news? The lab is continuing to explore these critical issues of public importance through its research on FATE (Fairness, Accountability, Transparency, Ethics).

For this finding, InfoSeeking researchers analyzed more than 10,000 answers, covering result lists manipulated with both random and fake information, from more than 500 English-speaking participants across the U.S.